How Does Deep Learning Work?

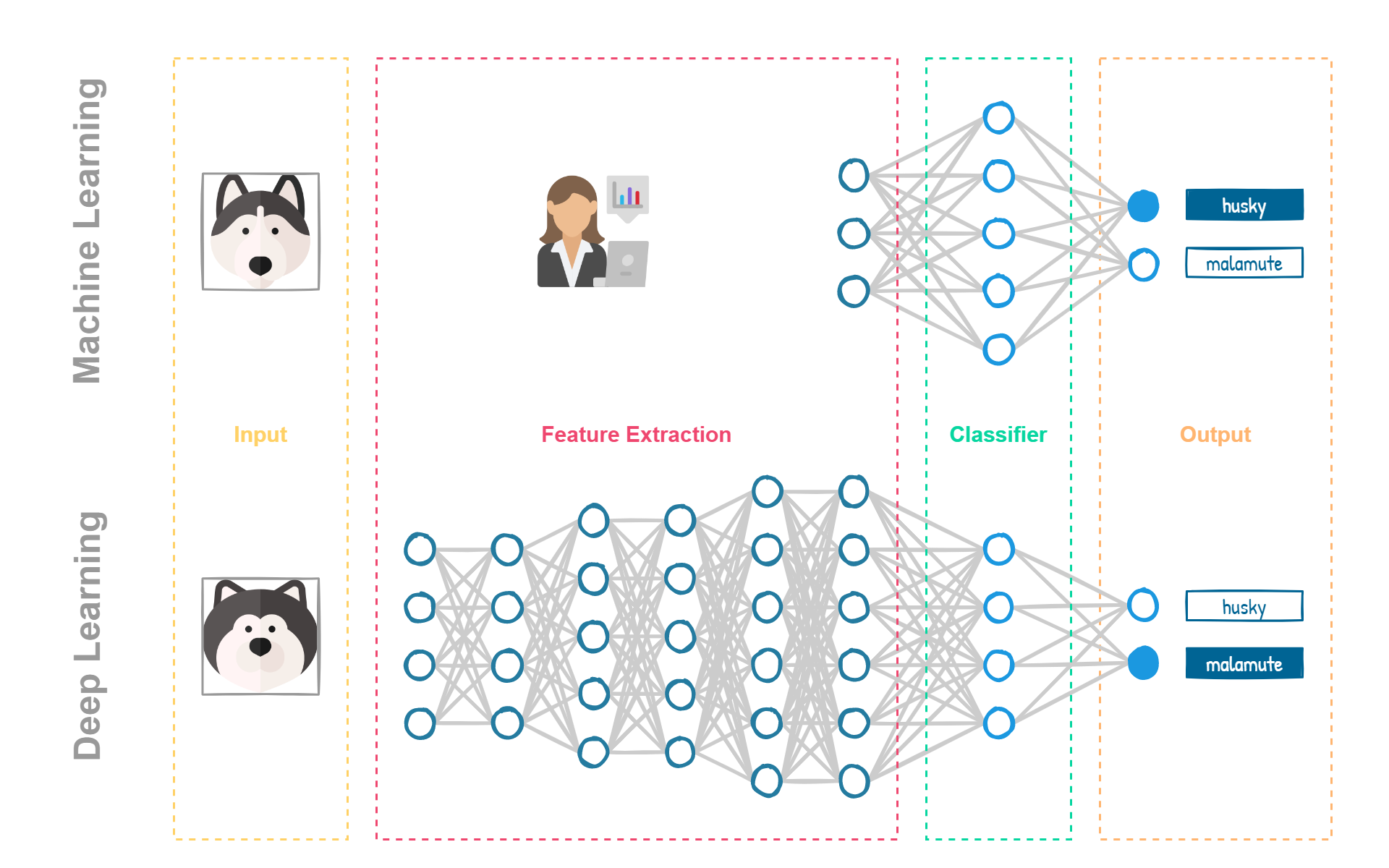

Deep Learning works by building a network of artificial neurons, arranged in layers, that process information sequentially. Each layer extracts higher-level features from the output of the layer before it, starting from the raw data, and the final output is generated by combining these features. The approach is called “deep” because of the many layers involved.
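To make the layered structure concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes, the random (untrained) weights, and the helper names `dense`, `make_layer`, and `forward` are all assumptions made for illustration; real frameworks implement the same idea with optimized tensor operations.

```python
import random

def dense(inputs, weights, biases):
    """One layer: each neuron computes a weighted sum of its inputs, then ReLU."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU activation
    return outputs

def make_layer(n_in, n_out, rng):
    """Random, untrained weights and zero biases (illustrative only)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(x, layers):
    """Pass the input through every layer in order."""
    for weights, biases in layers:
        x = dense(x, weights, biases)
    return x

rng = random.Random(0)
layer_sizes = [4, 8, 8, 2]  # hypothetical: 4 inputs, two hidden layers, 2 outputs
layers = [make_layer(n_in, n_out, rng)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

output = forward([0.5, -1.2, 3.0, 0.1], layers)
print(len(layers), "layers ->", len(output), "output values")
```

Because the weights are random, the output values themselves are meaningless here; the point is only that each layer consumes the previous layer's output.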

📊 To train a Deep Learning model, we feed the network a large amount of data. The model makes predictions on this data, and the error of these predictions is used to adjust the weights of the artificial neurons.

This process is repeated many times, allowing the model to improve its accuracy gradually. One of the key benefits of Deep Learning is its ability to automatically identify patterns and features in data without being explicitly told what to look for.

This makes it well-suited for tasks such as image and speech recognition, natural language processing, and autonomous decision-making.

“In this context, learning means finding a set of values for the weights of all layers in a network, such that the network will correctly map example inputs to their associated targets.” — Deep Learning with Python, François Chollet.
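As a rough illustration of "finding a set of values for the weights", the sketch below trains a single artificial neuron with gradient descent. The toy data (targets following y = 2x + 1), the learning rate, and the number of steps are assumptions chosen for the example; real networks repeat the same predict, measure, adjust cycle across many layers and far more data.

```python
# Toy data: the targets follow y = 2x + 1, a hypothetical rule the model must discover.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = 0.0, 0.0        # start from arbitrary weights
learning_rate = 0.05   # assumed step size

def mean_squared_error(w, b):
    """Average squared gap between predictions and targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

initial_loss = mean_squared_error(w, b)

for step in range(2000):
    # Gradient of the mean squared error with respect to each weight
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / len(xs)
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / len(xs)
    # Nudge the weights in the direction that reduces the error
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mean_squared_error(w, b)
print(f"w={w:.3f} b={b:.3f} loss: {initial_loss:.3f} -> {final_loss:.6f}")
```

After enough repetitions, `w` and `b` approach 2 and 1, so the neuron maps the example inputs to their targets.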

Unlike many other algorithms, most neural networks require a long training process, during which they search for the parameters that let them extract the most useful features and produce outputs as close as possible to the expected ones.

The number of parameters in a neural network can range from hundreds to billions (the full version of GPT-3 has 175 billion parameters). As a result, training takes much longer than it does for traditional Machine Learning algorithms, and the optimization can get stuck, for example in a poor local minimum. 🔥
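To see how quickly parameter counts grow, the sketch below counts the weights and biases of a fully connected network: a layer with `n_in` inputs and `n_out` neurons has `n_in * n_out` weights plus `n_out` biases. The function name and the layer sizes are hypothetical, picked only to illustrate the arithmetic.

```python
def count_dense_parameters(layer_sizes):
    """Total weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weights + one bias per neuron
    return total

# Hypothetical small classifier: 784 inputs, two hidden layers, 10 outputs
small = count_dense_parameters([784, 128, 64, 10])
print(small)  # 109386

# Widening only the hidden layers multiplies the count
wide = count_dense_parameters([784, 4096, 4096, 10])
print(wide)
```

Even this small network already has over a hundred thousand values to tune, which is one reason training takes so long.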

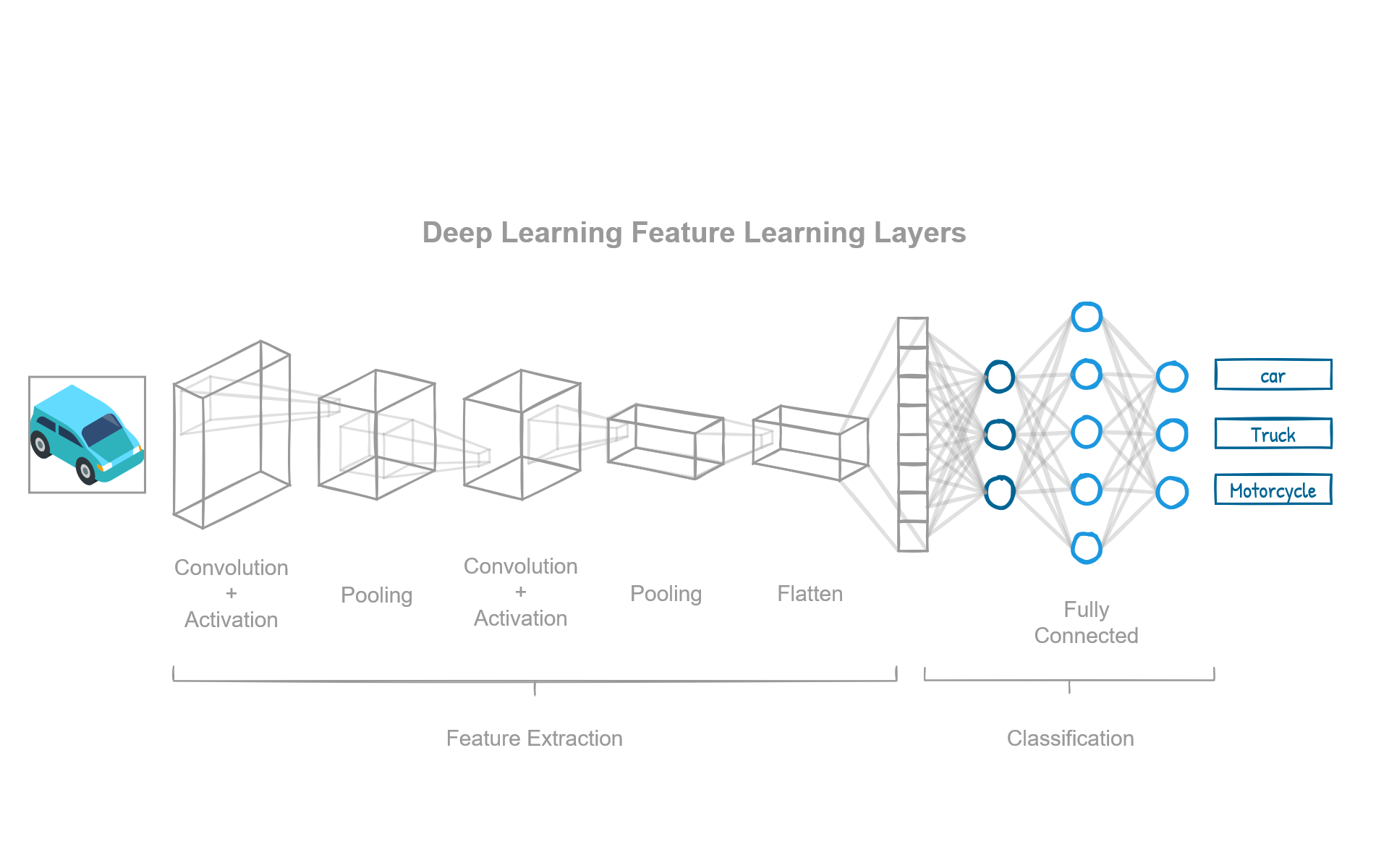

For example, assume the input data is an image (a matrix of pixels). The first layer typically abstracts the pixels and detects edges in the picture. The next layer might combine those edges into simple features, such as leaves and branches.

The next layer could then recognize a tree, and so on. Each layer can be seen as a transformation that turns the output of the previous layer into the input for the next one.

Each layer has a different level of abstraction, and the machine can learn which features of the data to place in which layer/level on its own. 📈
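The edge-detecting first layer can be sketched with a single convolution filter. In a trained network the filter values are learned from data; here a hand-written Sobel kernel stands in for a learned one, and the tiny image and the `convolve2d` helper are assumptions made for the example.

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image (no padding) and sum the products."""
    k = len(kernel)
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    result = []
    for r in range(rows):
        row = []
        for c in range(cols):
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(k) for j in range(k)))
        result.append(row)
    return result

# Tiny 5x6 image: dark (0) on the left, bright (1) on the right
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Sobel kernel that responds to vertical edges (stand-in for a learned filter)
sobel_x = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

edges = convolve2d(image, sobel_x)
for row in edges:
    print(row)  # [0, 4, 4, 0]
```

The output is zero in the flat regions and large where dark meets bright, which is the kind of feature map a later layer could combine into more complex shapes.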